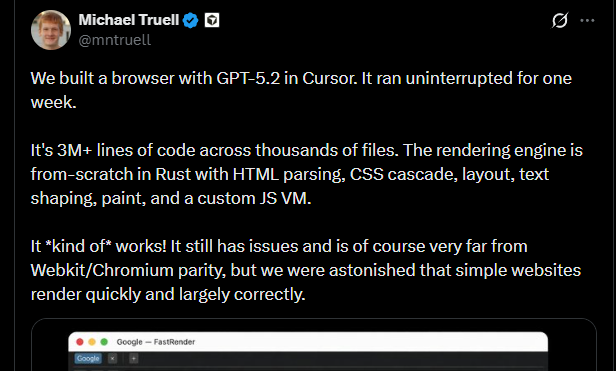

Last month, an interesting claim circulated across developer circles. The CEO of Cursor claimed that the company's AI agent had built a fully functioning web browser in just one week. The process, he said, consumed “trillions of tokens” as the agent generated code autonomously.

At first glance, this sounded like a breakthrough moment for AI-driven software engineering — a glimpse into a future where complex systems could be assembled almost instantly by intelligent agents. But once developers began digging deeper, the story became far more complicated.

The Math Behind the Claim

If we take the statement at face value, the economics alone are striking.

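Using a rough benchmark of $14 per million tokens for large-scale AI generation, the implied compute cost quickly balloons. One trillion tokens is a million blocks of one million tokens, so at that rate a single trillion costs roughly $14 million. At the scale suggested (trillions of tokens), $14 million is therefore the floor, and each additional trillion adds another $14 million.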
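The arithmetic behind that estimate is simple enough to check directly. Below is a minimal sketch that uses the article's $14-per-million-token benchmark (a rough blended rate, not a published price from any specific provider). The token volumes of one to three trillion are illustrative inputs only, since the exact figure was never disclosed.

```python
# Back-of-envelope compute cost: tokens consumed times price per million tokens.
# PRICE_PER_MILLION_USD is the article's rough benchmark, not a published rate.
PRICE_PER_MILLION_USD = 14.0


def estimated_cost_usd(tokens: int, price_per_million: float = PRICE_PER_MILLION_USD) -> float:
    """Return the cost in dollars of processing `tokens` at the given per-million rate."""
    return tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    # Illustrative token volumes; "trillions" was never quantified more precisely.
    for trillions in (1, 2, 3):
        tokens = trillions * 10**12
        print(f"{trillions} trillion tokens -> ${estimated_cost_usd(tokens):,.0f}")
```

At that rate, each trillion tokens adds about $14 million, so "trillions" implies a bill of at least $14 million and plausibly several times that.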

That’s not a small experiment. That’s venture-scale capital deployed into what is essentially an automated software generation pipeline.

The question is: what did that investment actually produce?

Developers Try the Code — And Hit a Wall

Cursor released the generated browser code on GitHub, presumably to validate the claim. But early adopters have run into problems.

Developers attempting to build and run the browser report that it’s difficult — sometimes impossible — to get a working executable. Dependencies are unclear, the architecture is inconsistent, and much of the code appears to be scaffolding rather than functioning implementation.

In other words, the repository resembles an enormous pile of generated output rather than a production-ready system.

This raises an uncomfortable question:

Did the AI really build a browser, or did it just generate browser-shaped code?

Three Million Lines of Code — But Not Three Million Lines of Value

One of the most surprising metrics from the release is its sheer size.

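The generated browser reportedly contains around 3 million lines of code. That is an enormous volume for one week of work, but line count alone proves little. Mozilla Firefox, the result of decades of engineering by experienced teams, runs to tens of millions of lines, and those lines add up to a browser people actually use every day.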

What’s more revealing is what that code is built on. Instead of implementing a browser engine from first principles, the project relies heavily on existing Mozilla parsing libraries for HTML and CSS.

That’s not inherently wrong — modern engineering is built on reuse — but it undermines the narrative of a fully AI-generated browser from scratch.

What we may actually be seeing is:

- AI assembling glue code around existing libraries

- Large volumes of redundant or unused logic

- Code generated for coverage rather than correctness

In short, scale without structure.

The Real Lesson: AI Can Generate Code Faster Than We Can Validate It

Whether Cursor spent $14 million or far less is almost beside the point. The more interesting takeaway is what this episode reveals about the current state of AI-driven development.

Today’s AI systems are exceptionally good at producing code.

What they are not yet equally good at is:

- Ensuring architectural coherence

- Maintaining consistent abstractions

- Producing runnable, optimized, maintainable systems

The bottleneck has shifted.

We are no longer limited by how fast code can be written.

We are limited by how fast humans can understand, verify, and trust what AI produces.
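A rough calculation shows how lopsided that gap can be. The sketch below assumes a hypothetical sustained review rate of 400 lines per hour per reviewer and a 2,000-hour working year. Both numbers are illustrative assumptions, not measurements; only the 3 million line figure comes from the reported release.

```python
# Hypothetical estimate of the human effort needed to review generated code once.
# REVIEW_LINES_PER_HOUR and HOURS_PER_YEAR are illustrative assumptions.
REVIEW_LINES_PER_HOUR = 400
HOURS_PER_YEAR = 2_000


def review_effort(lines_of_code: int,
                  lines_per_hour: int = REVIEW_LINES_PER_HOUR,
                  hours_per_year: int = HOURS_PER_YEAR) -> tuple[float, float]:
    """Return (review hours, reviewer-years) needed to read `lines_of_code` once."""
    hours = lines_of_code / lines_per_hour
    return hours, hours / hours_per_year


if __name__ == "__main__":
    hours, years = review_effort(3_000_000)  # reported size of the generated browser
    print(f"{hours:,.0f} review hours, about {years:.2f} reviewer-years")
```

Under those assumptions, a single careful pass over one week of generated output would occupy one reviewer for nearly four years, before any debugging or refactoring begins.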